Caching: It's Good for Your Users — and the Planet

When an image loads in 400 ms instead of 4 seconds, your app feels fast, responsive, alive. That tiny, near-invisible win saves time, bandwidth, energy, and money.

Caching isn’t just about speed. It’s about scalability, sustainability, cost-efficiency, and user delight. Once you learn to cache with intention, you won’t build any other way.

To demonstrate caching in action, we’ll use a demo app that delivers joy through Lucky Dube’s music. For simplicity, we'll focus on one cover image — House of Exile — to show exactly how caching transforms performance.

When Should You Cache?

Caching makes sense when users repeatedly access the same or related data. This principle is called locality of reference, and it comes in two key forms:

- Temporal locality: The same data is accessed again within a short time.

Example: A user switches tabs and returns to the same album.

- Spatial locality: Data near or related to the previous access is requested.

Example: After exploring one album, the user browses similar ones.
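To make temporal locality concrete, here is a minimal sketch that replays a sequence of album requests through a tiny in-memory cache and counts the hits. The `countHits` helper and the album keys are illustrative assumptions, not code from the demo app.

```ts
// Replays a sequence of requested keys through an unbounded cache and counts
// how many requests could have been served without going to the network.
function countHits(requests: string[]): { hits: number; misses: number } {
  const seen = new Set<string>();
  let hits = 0;
  let misses = 0;
  for (const key of requests) {
    if (seen.has(key)) {
      hits += 1; // repeated access: temporal locality pays off
    } else {
      misses += 1; // first access: must be fetched and stored
      seen.add(key);
    }
  }
  return { hits, misses };
}

// A user opens an album, visits another one, then comes back twice.
const result = countHits(["house-of-exile", "prisoner", "house-of-exile", "house-of-exile"]);
console.log(result); // { hits: 2, misses: 2 }
```

The more often the same key repeats within a session, the larger the share of requests a cache can absorb.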

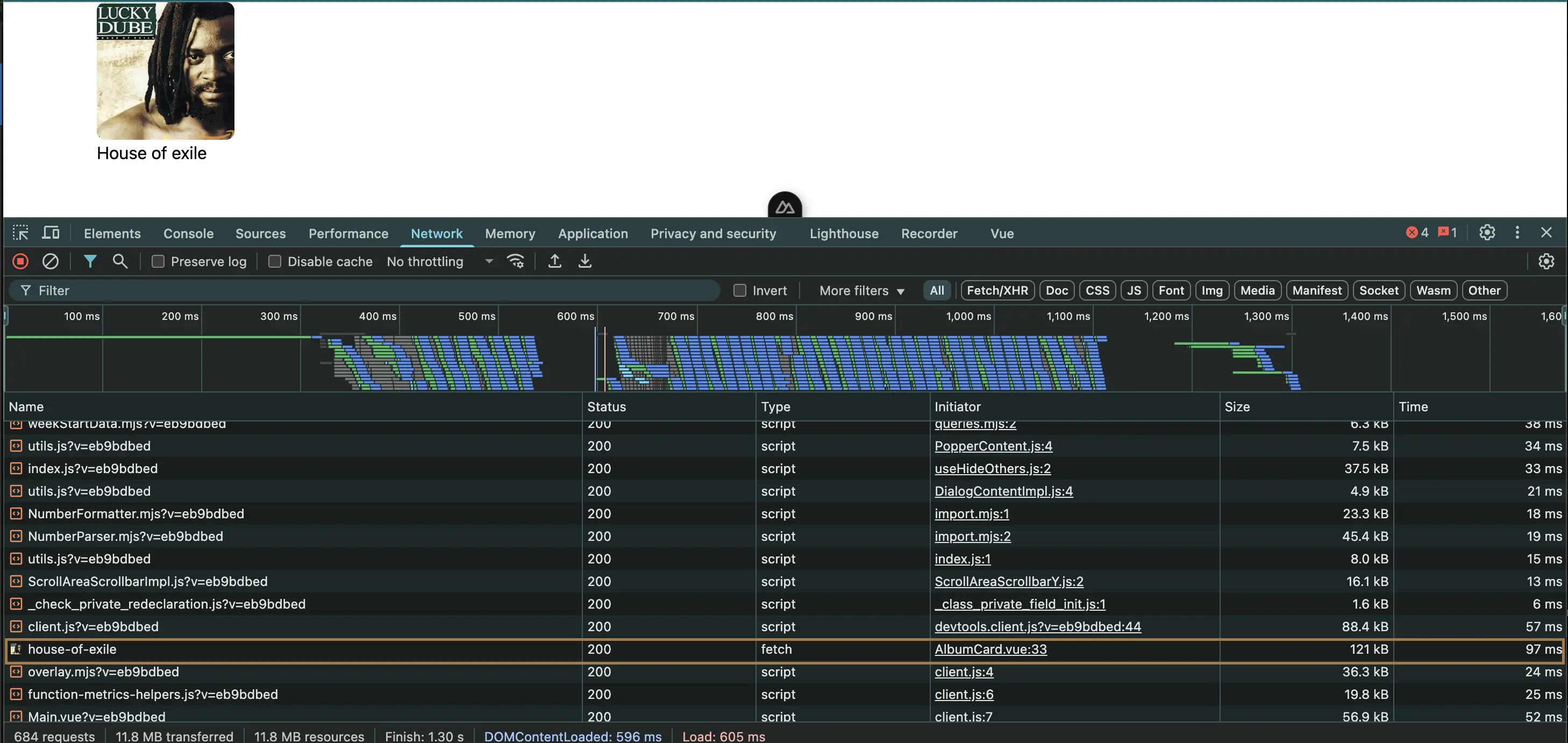

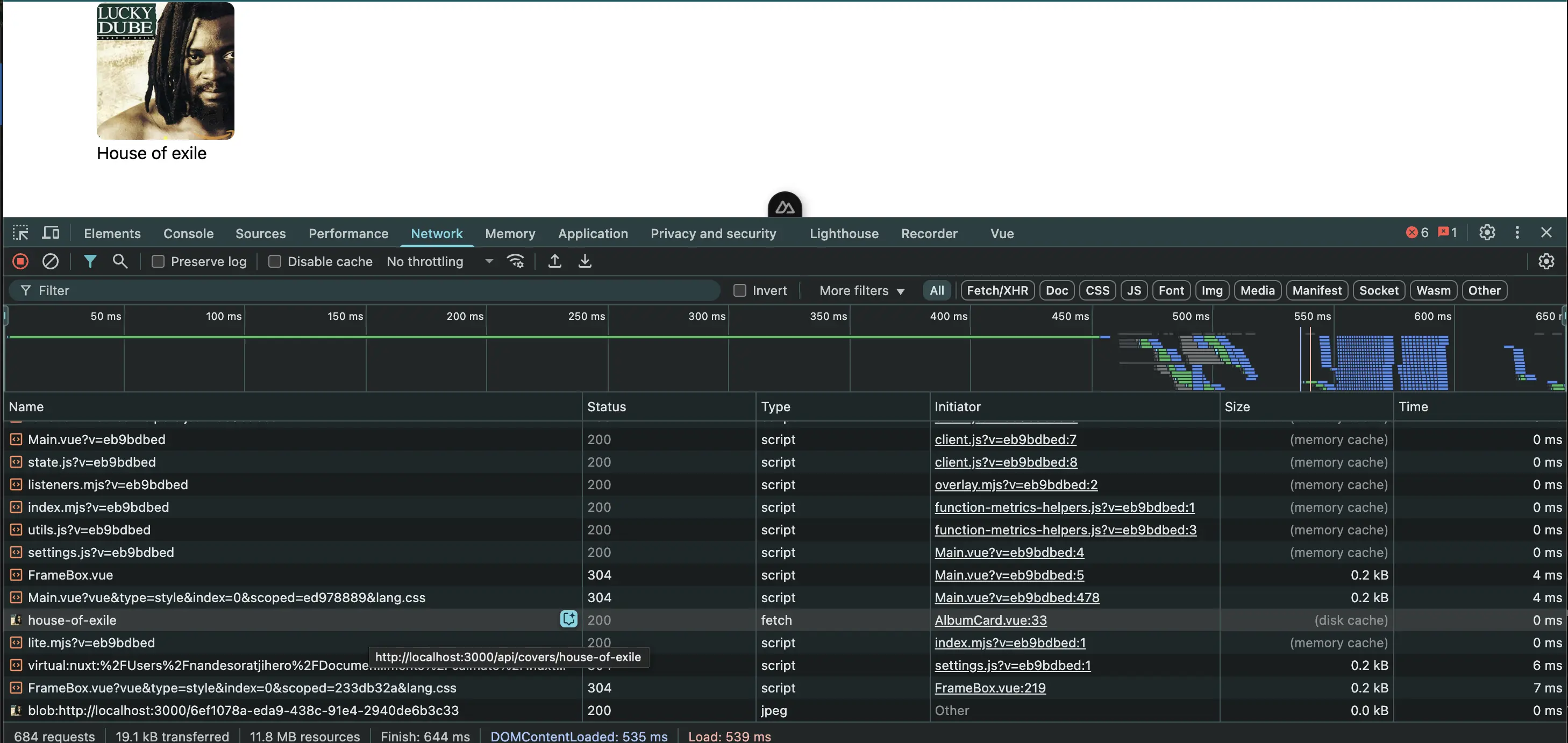

In our app, switching tabs without caching caused redundant network requests for the same album cover. After implementing caching, those requests dropped to zero. Chrome DevTools confirmed (disk cache) responses and sub-millisecond load times.

Why Cache?

Caching isn’t just a performance booster — it’s a business and sustainability decision.

📉 Reduced Server Load

Cached assets don’t hit your origin server. That frees up CPU, memory, and energy for the things that actually need server logic.

Before caching (DevTools):

After caching (DevTools):

Pro Tip: In DevTools > Network tab, a value of (disk cache) or (memory cache) in the Size column indicates the resource was served from the browser cache.
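The same check can be approximated in code with the Resource Timing API. The sketch below is a heuristic, not an official cache indicator: it assumes that an entry with a `transferSize` of 0 and a non-zero `decodedBodySize` was served from the browser cache. The `ResourceEntry` interface and both function names are hypothetical helpers introduced for this example.

```ts
// Minimal shape of the PerformanceResourceTiming fields this sketch reads.
interface ResourceEntry {
  name: string;
  transferSize: number;
  decodedBodySize: number;
}

// transferSize is 0 when no bytes crossed the network; decodedBodySize > 0
// rules out cross-origin entries whose sizes are hidden and reported as 0.
function servedFromCache(entry: ResourceEntry): boolean {
  return entry.transferSize === 0 && entry.decodedBodySize > 0;
}

// Returns the URLs of all entries that look like cache hits.
function cachedResourceNames(entries: ResourceEntry[]): string[] {
  return entries.filter(servedFromCache).map((entry) => entry.name);
}

// In a browser console, the entries would come from:
// performance.getEntriesByType("resource") as PerformanceResourceTiming[]
```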

⚡ Lower Latency

A resource served from disk cache loads nearly instantly. A Time value of 0 ms in DevTools means it never touched the server — just raw speed. For the House of Exile album cover, latency dropped from 197 ms to 0 ms after caching.

💸 Lower Cloud Costs

If you're deploying to services like Google Cloud Run or Firebase, every request costs something. Reduce redundant requests, and you reduce your bill.

- Third-party APIs with rate limits? Cache.

- Slow database queries? Cache.

- Static assets like album covers? Cache.
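For rate-limited APIs and slow queries, one common approach is to wrap the expensive call in a time-to-live (TTL) cache. The following is a minimal sketch under that assumption; `withTtlCache`, `getAlbum`, and the 60-second TTL are hypothetical and not part of the demo app.

```ts
// Wraps an async loader so repeated calls with the same key reuse the stored
// promise until it is older than ttlMs. `now` is injectable for testing.
function withTtlCache<T>(
  load: (key: string) => Promise<T>,
  ttlMs: number,
  now: () => number = Date.now,
): (key: string) => Promise<T> {
  const entries = new Map<string, { promise: Promise<T>; storedAt: number }>();
  return (key) => {
    const entry = entries.get(key);
    if (entry && now() - entry.storedAt < ttlMs) {
      return entry.promise; // fresh hit: the slow backend is not called
    }
    const promise = load(key);
    // Drop failed loads so the next call retries instead of caching the error.
    promise.catch(() => {
      if (entries.get(key)?.promise === promise) entries.delete(key);
    });
    entries.set(key, { promise, storedAt: now() });
    return promise;
  };
}

// Usage: a hypothetical rate-limited lookup, cached for 60 seconds.
let upstreamCalls = 0;
const getAlbum = withTtlCache(async (id: string) => {
  upstreamCalls += 1;
  return { id, title: "House of Exile" };
}, 60_000);

getAlbum("house-of-exile");
getAlbum("house-of-exile");
console.log(upstreamCalls); // 1
```

Storing the promise rather than the resolved value also means two simultaneous requests for the same key share a single upstream call.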

🌍 Bandwidth Efficiency and Scalability

Every byte not sent is a byte saved — for your infrastructure and your users. This adds up across mobile networks, low-power devices, and regions with limited connectivity.

Caching makes your app leaner and more scalable — without rewriting business logic.

📴 Offline and Low-Connectivity Support

Service workers enable offline-first behavior. In our app, a user can revisit the previously viewed album even with no internet connection.

That’s not just performance — that’s resilience.

What Can You Cache?

| Resource Type | Examples |
|---|---|
| HTML | index.html, route files |
| CSS | App and utility stylesheets |
| JavaScript | Page logic, modules, libraries |
| Images | Album covers (JPG, PNG, SVG) |
| Fonts | WOFF2, TTF, OTF |
| API Data | JSON responses, paginated results |

How to Cache

Caching falls into two categories: In-Memory (volatile) and Persistent (survives reloads).

🧠 In-Memory Cache (Session-Limited)

Fast, simple, and great for short-lived session data — but wiped on reload.

Example: Nuxt.js

Nuxt allows memory caching via useFetch with a unique key:

```vue
<script setup>
const { data } = await useFetch("/api/songs", {
  key: "songs",
});
</script>
```

Data is stored in memory and reused across tabs until the session ends.
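To show what an in-memory cache looks like underneath, here is a minimal sketch of a bounded cache with least-recently-used (LRU) eviction. It is an illustration only and not how Nuxt implements `useFetch`; the `LruCache` class and the sample keys are assumptions made for this example.

```ts
// A small in-memory cache that evicts the least recently used entry once it
// holds more than maxEntries. Map preserves insertion order, so the first
// key is always the oldest.
class LruCache<V> {
  private readonly entries = new Map<string, V>();
  private readonly maxEntries: number;

  constructor(maxEntries: number) {
    this.maxEntries = maxEntries;
  }

  get(key: string): V | undefined {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key) as V;
    // Re-insert to mark the key as most recently used.
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      const oldest = this.entries.keys().next().value as string;
      this.entries.delete(oldest);
    }
  }
}

const covers = new LruCache<string>(2);
covers.set("house-of-exile", "cover-a");
covers.set("prisoner", "cover-b");
covers.get("house-of-exile"); // touch: now the most recently used
covers.set("victims", "cover-c"); // evicts "prisoner"
console.log(covers.get("prisoner")); // undefined
```

Because everything lives in a `Map` inside the page's JavaScript heap, a reload discards it, which is the trade-off described above.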

💾 Persistent Cache (Survives Reloads)

Best for assets you want to reuse across sessions or offline.

Service Worker + Cache API

Used in Progressive Web Apps (PWAs) to intercept requests and return cached responses:

```ts
const CACHE_NAME = "lucky-dube-cache";
const CACHE_URLS = ["/", "/api/albums", "/api/songs"];

// Pre-cache the core URLs when the service worker is installed
self.addEventListener("install", (event) => {
  event.waitUntil(
    caches.open(CACHE_NAME).then((cache) => cache.addAll(CACHE_URLS))
  );
});

// Cache-first: answer GET requests from the cache, fall back to the network
self.addEventListener("fetch", (event) => {
  if (event.request.method === "GET") {
    event.respondWith(
      caches.match(event.request).then((cachedResponse) => {
        if (cachedResponse) return cachedResponse;

        return fetch(event.request).then((response) => {
          return caches.open(CACHE_NAME).then((cache) => {
            // Store a copy; the original response goes back to the page
            cache.put(event.request, response.clone());
            return response;
          });
        });
      })
    );
  }
});
```

HTTP Caching with Headers

Control browser and CDN behavior using headers like:

```ts
setResponseHeader(event, "Cache-Control", "public, max-age=864000");
```

This example caches the response for 10 days.
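Since `max-age` is expressed in seconds, it can help to derive the value rather than hard-code it. A small sketch of that arithmetic follows; `publicMaxAge` is a hypothetical helper, not an h3 or Nuxt function.

```ts
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400

// Builds a Cache-Control value for a public response kept for `days` days.
function publicMaxAge(days: number): string {
  return `public, max-age=${Math.round(days * SECONDS_PER_DAY)}`;
}

console.log(publicMaxAge(10)); // public, max-age=864000
```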

In Nuxt server routes:

```ts
export default defineEventHandler(async (event) => {
  const cover = await getCover();
  setResponseHeader(event, "Cache-Control", "public, max-age=864000");
  return cover;
});
```

🔁 Conditional Requests: Save Bandwidth, Reuse Assets

Even when a cached copy has expired, the browser doesn’t have to download the file again. It can ask the server: “Has this changed?”

This is done with conditional requests, using ETag or Last-Modified headers.

🧪 How it works

Server (on first request):

```http
ETag: "abc123"
Last-Modified: Wed, 15 May 2024 12:00:00 GMT
```

Browser (on subsequent request):

```http
If-None-Match: "abc123"
If-Modified-Since: Wed, 15 May 2024 12:00:00 GMT
```

If the file hasn’t changed, the server replies:

```http
HTTP/1.1 304 Not Modified
```

The cool thing about this? ✅ Less bandwidth. ✅ No payload. ✅ Instant reuse.
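The server-side decision in this exchange can be sketched as a pure function. This is a simplified illustration: it handles the `*` wildcard, lists of tags, and weak validators (`W/` prefix), but it assumes that tags contain no commas. The names `opaqueTag` and `isNotModified` are hypothetical.

```ts
// Strips the weak-validator prefix so W/"abc" and "abc" compare as equal,
// which is the weak comparison If-None-Match calls for.
function opaqueTag(entityTag: string): string {
  const trimmed = entityTag.trim();
  return trimmed.startsWith("W/") ? trimmed.slice(2) : trimmed;
}

// Returns true when the client's If-None-Match header matches the current
// ETag, meaning the server can answer 304 Not Modified with no body.
// Assumption: individual tags contain no commas, so a plain split is enough.
function isNotModified(ifNoneMatch: string | undefined, currentEtag: string): boolean {
  if (ifNoneMatch === undefined) return false;
  if (ifNoneMatch.trim() === "*") return true;
  const current = opaqueTag(currentEtag);
  return ifNoneMatch.split(",").some((candidate) => opaqueTag(candidate) === current);
}

console.log(isNotModified('"abc123"', '"abc123"')); // true
console.log(isNotModified('W/"abc123", "def456"', '"abc123"')); // true
console.log(isNotModified('"old"', '"abc123"')); // false
```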

Implementing ETag in Nuxt server routes:

```ts
import { createHash } from "node:crypto";

export default defineEventHandler(async (event) => {
  const image = await getCover();

  // ETag values are quoted strings; hashing the content ties the tag to it
  const etag = `"${createHash("md5").update(image).digest("hex")}"`;
  setResponseHeader(event, "ETag", etag);

  // The browser's copy is still current: answer 304 with no body
  if (getRequestHeader(event, "If-None-Match") === etag) {
    setResponseStatus(event, 304);
    return null;
  }

  return image;
});
```

Cache Invalidation: The Hidden Challenge

Caching is powerful — but serving stale content can lead to bugs or confusion. Solve this with:

✅ Content Hashing

Tools like Vite/Webpack create filenames like:

```html
<script src="/_nuxt/app.a1b2c3.js"></script>
```

The hash changes only when the content does. This makes the file immutable and safely cacheable forever.
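The idea behind content hashing can be sketched in a few lines. The example below uses FNV-1a, a small non-cryptographic hash, purely as a stand-in; it is not the algorithm Vite or Webpack use, and `fnv1a` and `hashedFileName` are hypothetical helpers.

```ts
// FNV-1a over UTF-16 code units, used here only as a stand-in for the
// stronger content hashes that real build tools compute.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i += 1) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16).padStart(8, "0");
}

// Inserts the content hash before the file extension: app.js -> app.<hash>.js
function hashedFileName(fileName: string, content: string): string {
  const dot = fileName.lastIndexOf(".");
  const hash = fnv1a(content);
  return dot === -1
    ? `${fileName}.${hash}`
    : `${fileName.slice(0, dot)}.${hash}${fileName.slice(dot)}`;
}

const v1 = hashedFileName("app.js", "console.log('v1')");
const v2 = hashedFileName("app.js", "console.log('v2')");
console.log(v1 === hashedFileName("app.js", "console.log('v1')")); // true
console.log(v1 === v2); // false
```

Identical content always maps to the same file name, so a cached copy stays valid; any change to the content produces a new name and therefore a new URL.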

✅ max-age for Dynamic Data

For frequently updated responses, set shorter expiry:

```ts
setResponseHeader(event, "Cache-Control", "public, max-age=600"); // 10 minutes
```

✅ Query Strings for Static Files

If you’re serving custom files from /public, use query parameters:

```html
<script src="/scripts/main.js?v=3.2.0"></script>
```

Browsers treat each version as a different URL, so bumping the version forces a fresh download.
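If the version lives in one place, such as a build constant, the query string can be appended by a tiny helper. This is a sketch only; `withVersion` is a hypothetical function, and it assumes the path has no `#fragment`.

```ts
// Appends a version parameter, using "&" when the path already has a query.
function withVersion(path: string, version: string): string {
  const separator = path.includes("?") ? "&" : "?";
  return `${path}${separator}v=${encodeURIComponent(version)}`;
}

console.log(withVersion("/scripts/main.js", "3.2.0")); // /scripts/main.js?v=3.2.0
```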

Conclusion

Caching isn’t magic — it’s engineering. Start with one asset. Cache it well. Measure the difference. Then do it again. Your future users — especially the ones on flaky 3G in a faraway place — and the environment will thank you.